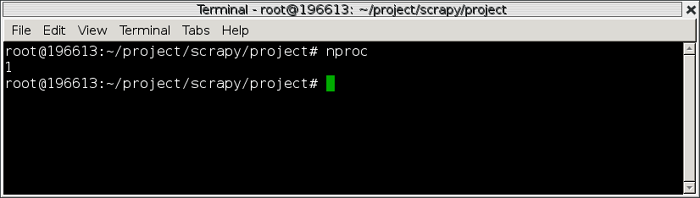

In this exercise, we will work with a hybrid program that makes multithreaded MPI calls from inside an OpenMP parallel section. The short program is a fully functioning version of an example presented earlier in this topic. We will look at the effects of KMP_AFFINITY on the placement of threads from each MPI process, first on a Stampede2 KNL node and then on a Skylake node.

To get the source files, copy the text you will find linked to the names of these codes, mpimulti.f90 and mycpu.c. The code mycpu.c merely provides a Fortran interface to the sched_getcpu() function, a C-only API that returns the index of the cpu upon which the thread is currently running.

Compile mycpu.c into mycpu.o, then build mpimulti.f90 against it, using the default Intel compilers and MPI implementation:

    mpif90 -qopenmp mpimulti.f90 mycpu.o -o mpimulti

The mpimulti program takes such a trivial amount of time to run that it is fine to run it interactively on a Stampede2 KNL node, even with 68 total threads, or one thread for every core. Request the interactive job with the idev command, passing the name of your specific account or project together with the arguments -N 1 -n 2. The -N 1 -n 2 arguments mean that 1 node will be assigned to your job, and only 2 (or fewer) MPI processes can be started on that node when an ibrun command is issued. The -p development flag is implied here, also, as the KNL development queue is the default for the idev command.

Once you get a command prompt, use ibrun to launch a pair of mpimulti processes with 34 threads each. It's advisable to pipe the output of the program through sort -f to make it easier to spot patterns. Even though no affinity has yet been set, you will find that the 34 senders (MPI rank 0) are mapped to sequentially-numbered cores, followed by the 34 receivers (MPI rank 1) in sequence, which seems like a logical way to arrange the tasks and threads on a node.

We're now ready to experiment with thread affinity. An easy way to do this is to try different settings of the KMP_AFFINITY environment variable. The first question is whether the pattern of thread assignments seen above corresponds to a particular affinity setting. Clearly, the pattern we observed previously is the same as the "scatter" mapping. (Note, however, that affinity must be enabled in some way if you want to be sure that the threads stay in their initial places as the program runs!)

The question then becomes, how do we understand the odd-looking results with the "compact" setting? We gain some clarity by executing the following commands in the interactive session:

    cat /proc/cpuinfo | grep "processor" | less
    cat /proc/cpuinfo | grep "processor\|core id" | less

The output of the first command lets us know that the KNL has 4*68=272 distinct cpus (which are unfortunately termed "processors" in /proc/cpuinfo). The reason is that each core has 4 hardware threads, each of which must have its own unique id number. From the second command, we further determine that these 272 ids are assigned to the 68 physical cores in round-robin fashion. (Note that KNL core ids fall in the range 0-75, with a few gaps.)

Now we see why the cpu ids are mostly greater than 68 when KMP_AFFINITY=compact: the OpenMP threads are occupying the hardware threads on just a subset of the physical cores. In other words, "compact" on KNL means that up to 4 OpenMP threads are packed onto the minimum number of physical cores (9 for each MPI rank, in this case). You can confirm this by subtracting a multiple of 68 from any high-numbered cpu. You may find it helpful to refer to the diagram from an earlier page in this topic, which illustrates the scatter vs. compact thread affinities given 4 hardware threads per core.

Next, let's gain interactive access to a Stampede2 Skylake node and run some tests. Be sure to use the -p skx-dev argument to specify the appropriate queue. When you get a command prompt, follow the same initial steps that you did for KNL, but this time use 24 threads per process, as an SKX node has 48 total cores.

Notice that some cpu values may occur more than once in the output. Threads are simply scheduled on the least loaded cpus on the node, even if other threads have already been assigned to them. If you look a bit more carefully, you will see that all sending threads (on rank 0) are located on even-numbered cpus, while all receiving threads (on rank 1) are on odd-numbered cpus. This pattern makes sense if you assume that (a) each process is given affinity to one of the two sockets, and (b) the cpus are numbered alternately across the two sockets, so that one socket holds the even-numbered cpus and the other holds the odd-numbered ones. To verify this numbering scheme of the cpus, we can scan the file /proc/cpuinfo.
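The round-robin KNL cpu numbering described in this exercise can be checked with a little arithmetic. The sketch below is only an illustration, not part of the exercise codes: the function names are hypothetical, and `compact_cpus` assumes that a rank's compact placement starts at the first core and fills all 4 hardware threads of one core before moving to the next. Note that `core_slot` gives a core's position among the 68 cores, which is not the same as the "core id" field in /proc/cpuinfo (that field has gaps).

```python
import math

N_CORES = 68           # physical cores on a KNL node (from the text)
THREADS_PER_CORE = 4   # hardware threads per core (from the text)


def core_slot(cpu):
    """Position (0-67) of the physical core owning this cpu id,
    assuming the 272 ids are dealt out round-robin across 68 cores."""
    return cpu % N_CORES


def hw_thread(cpu):
    """Which of the core's 4 hardware threads (0-3) this cpu id is."""
    return cpu // N_CORES


def cores_needed(n_threads):
    """Minimum number of cores that can hold n_threads at 4 per core."""
    return math.ceil(n_threads / THREADS_PER_CORE)


def compact_cpus(n_threads, first_slot=0):
    """Hypothetical compact placement: fill every hardware thread of
    one core before moving on to the next core."""
    return [
        first_slot + i // THREADS_PER_CORE + N_CORES * (i % THREADS_PER_CORE)
        for i in range(n_threads)
    ]


if __name__ == "__main__":
    cpus = compact_cpus(34)  # one MPI rank with 34 OpenMP threads
    print("cpu ids:", cpus)
    print("distinct cores used:", len({core_slot(c) for c in cpus}))
    print("ids of 68 or above:", sum(c >= N_CORES for c in cpus))
```

Under these assumptions, 34 threads land on 9 cores, and most of the resulting cpu ids are 68 or higher, which is consistent with the "compact" output discussed in the exercise.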
